Autonomous vehicles change how crashes happen and how you seek justice after harm. Old rules for fault grew from human error at the wheel. Now software, sensors, and data shape who is responsible when something goes wrong. You face new questions. Is the driver at fault? Is the manufacturer? Is the software developer? The answers affect who pays your medical bills, lost wages, and long-term care. Lawmakers, judges, and experienced personal injury attorneys are now adjusting long-standing ideas about negligence, product defects, and insurance coverage. They must fit these ideas to cars that make driving decisions on their own. This blog explains how those rules are shifting, what protections you still have, and what you should watch for if you or someone you love is harmed in a crash with an autonomous vehicle.

From human error to shared responsibility

For many years, most crashes came from three things. Speeding. Distraction. Impairment. Law grew around one simple idea. A person made a bad choice and caused harm. You proved that choice. You proved your loss. You received payment.

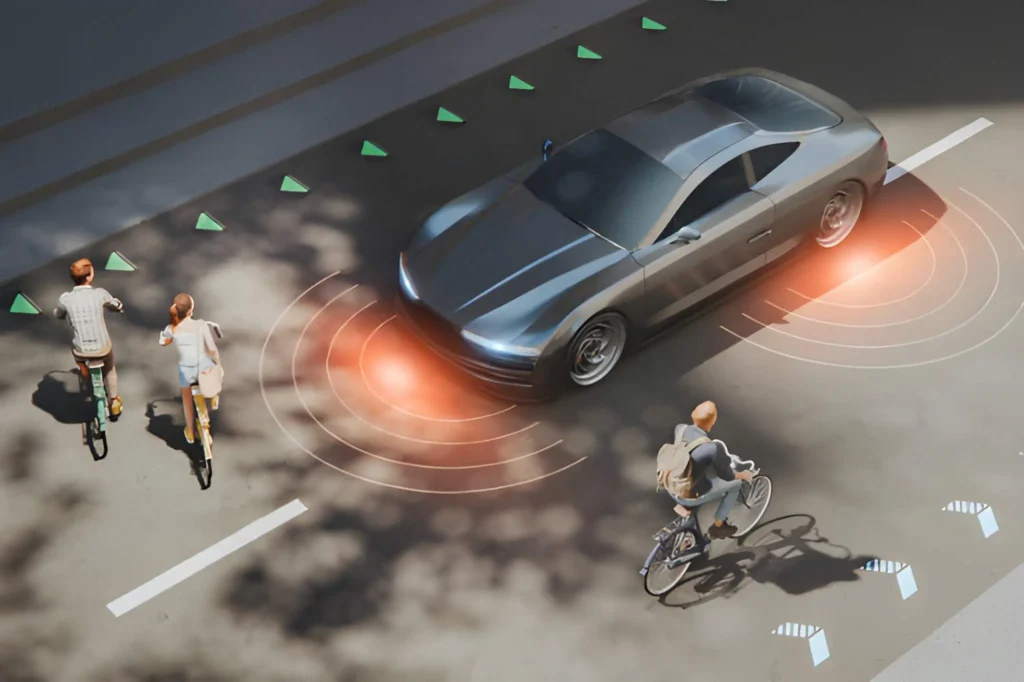

Autonomous vehicles change that story. A sensor can fail. A camera can misread a road sign. A map can be out of date. A software update can change how a car reacts to a child in a crosswalk. Your harm is the same. Yet fault might rest with several people and companies at once.

Now you may need to show three things. How the human driver acted. How the vehicle worked. How the company tested and monitored the system. That mix can feel cold and confusing when you are hurt and scared.

How autonomy levels affect your case

The National Highway Traffic Safety Administration (NHTSA) describes driving automation using six levels defined by SAE International, from Level 0 (no automation) to Level 5 (full automation). These levels matter for your claim because they shape who had control at the time of the crash.

Automation levels and likely focus of responsibility

| Automation level | Who controls driving | Common fault questions |
|---|---|---|
| Level 0: No automation | Human controls all driving tasks | Did the driver act with care? Did another driver break traffic rules? |
| Level 1: Driver assistance | Human drives. System helps with steering or speed | Did the driver rely too much on the system? Did the system fail in a clear way? |
| Level 2: Partial automation | System controls steering and speed. Human must stay alert and ready to take over | Did the driver stay alert? Did warnings work? Did the system handle the road as promised? |
| Level 3: Conditional automation | System drives in set conditions. Human must take over when the system asks | Did the system give enough warning before handing back control? Did the driver respond in time? |
| Level 4: High automation | System drives in set conditions. Human does not need to monitor within those conditions | Did the company define safe conditions? Did the car stay within those limits? |
| Level 5: Full automation | System drives in all conditions | Did a design or software defect cause the crash? Were maintenance instructions clear, and were they followed? |

You can read more about these levels in NHTSA guidance at https://www.nhtsa.gov/technology-innovation/automated-vehicles-safety.

New kinds of evidence after a crash

In a crash with a human driver, you often rely on three classic forms of proof. Police reports. Witness statements. Photos of the scene. Autonomous vehicles add new forms of evidence that can help or hurt your claim.

Key sources now include:

- Event data recorders that store speed, braking, and steering

- Software logs that show what the system sensed and chose to do

- Over-the-air update records that show when code changed

Access to this data can decide your case. Yet you may not control it. The manufacturer might store it. An insurer might hold it. You may need to act quickly to preserve it before it is lost or overwritten.

Product defects and shared fault

Personal injury law has long applied product defect rules to things like faulty seat belts and exploding fuel tanks. Those same rules now reach software and sensors. You might claim that the car had a flawed design (a design defect). Or that a safe design was built the wrong way (a manufacturing defect). Or that warnings about the system's limits were not clear (a failure to warn).

At the same time, the driver may share fault. For example, a driver might use an automated system on a road where the maker told them not to use it. You then face a mix of human and product fault. Courts must sort out how to split blame and payment.

Insurance coverage and gaps you should watch

Insurance products grew up around human drivers. Policies assume one main question: who drove with care? Autonomous vehicles create three new stress points for you.

- You may face delays while insurers and companies argue over fault

- You may see disputes over who must pay first: the driver's policy or the manufacturer's

- You may lose access to fast claim tools if fault is unclear

Researchers at agencies such as the U.S. Department of Transportation study these shifts. You can follow policy updates through resources like the ITS Joint Program Office at https://its.dot.gov/automated_vehicles/index.htm.

What you should do after an autonomous vehicle crash

You can feel powerless when a machine made choices that harmed you. Yet your steps in the first hours still matter. Focus on three simple moves.

- Get medical care and keep every record

- Gather photos of the scene, the vehicles, and any visible injuries

- Ask in writing that data from the vehicle be preserved

You should also keep a journal of your pain, limits on your work, and changes at home. That record helps show the full weight of the crash on your life.

How the law will keep changing

Personal injury law has always moved with new machines. Courts once struggled with trains, then with airplanes, then with early airbags. Each time, rules slowly shifted to match daily life. Autonomous vehicles now force the next shift.

You can expect three coming trends. Clearer rules about who owns and shares crash data. New safety standards for sensors and software. Stronger duties for companies to warn you about the limits of their systems.

You do not need to track every legal change. Yet you should know this. The core promise of personal injury law stays the same. If you suffer harm because others made unsafe choices, you can seek payment and support. Autonomous vehicles do not erase that right. They only change the path you must walk to use it.